Light up My Life!

Product: Dancing LED Lights

Why not add a splash of color to spaces in the home? I needed to brighten up a dark area under the loft in my study. For many years, I owned a Philips LED spotlight that projected a pool of any color that the user dialed in. That beautiful lamp inspired me to program an LED strip to display many colorful patterns.

I used a WS2812B LED strip. This model has individually addressable LEDs. My strip had 120 LEDs and was 2 meters in length. I paired it with an ESP32 microcontroller that I programmed in C/C++.

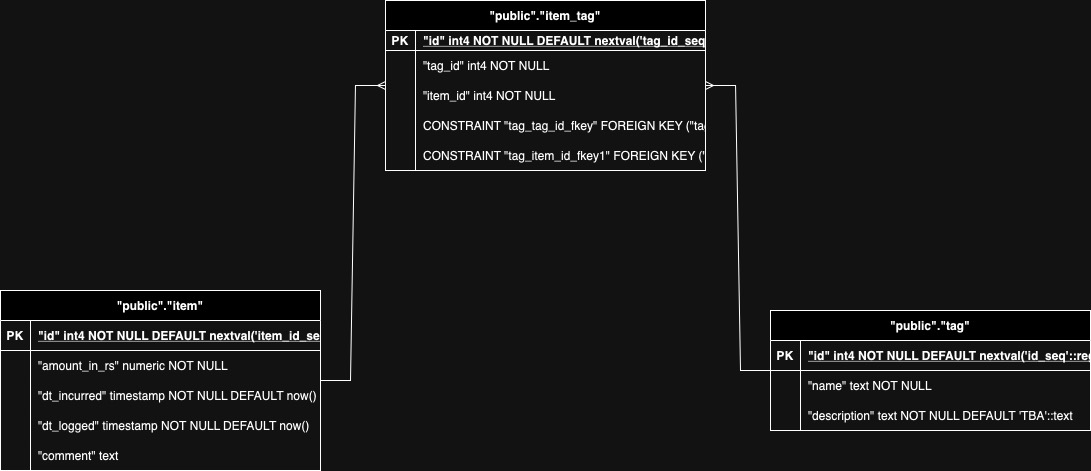

Electrical Schematics: The Circuit

In microcontroller projects, there is always the question of managing a variety of power requirements. The ESP32 is a 3.3V microcontroller and the LED strip is 5V. There are three primary patterns for powering the system:

- Power the ESP32 from the mains through its USB port, using a standard cell phone wall charger (rated 2A). The LED strip draws its power from the ESP32 dev board.

- Power the LED strip independently of the ESP32 with a “wall wart”, rated 5V 10A or higher.

- Power the LED strip independently of the ESP32 with a bench-top power supply and inject power into the strip at multiple (at least 2) points from different source channels.

These methods are beautifully illustrated in a video by YouTuber and maker Chris Maher.
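Choosing among these patterns comes down to a current budget. The sketch below is a rough calculator. It assumes the commonly quoted rule of thumb of about 60 mA per WS2812B LED at full white, which is roughly 20 mA per color channel. Real draw depends on the colors and brightness being shown. The helper names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Rule-of-thumb assumption: ~20 mA per color channel, so ~60 mA per LED at full white.
constexpr uint32_t kMilliampsPerLedFullWhite = 60;

// Worst-case current (mA) when every one of numLeds LEDs shows full white.
uint32_t worstCaseMilliamps(uint32_t numLeds) {
    return numLeds * kMilliampsPerLedFullWhite;
}

// Number of LEDs a supply can drive at full white without exceeding its rating.
uint32_t maxLedsAtFullWhite(uint32_t supplyMilliamps) {
    return supplyMilliamps / kMilliampsPerLedFullWhite;
}
```

By this estimate, two 120-LED strips could draw up to about 14.4 A at full white. A 5A supply covers only 83 LEDs at full white, and a 2A phone charger covers only 33 even before the ESP32 takes its share. Mixed palette colors at reduced brightness draw less than full white. FastLED can also cap total power in software with `FastLED.setMaxPowerInVoltsAndMilliamps(5, 5000)`.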

I had planned to use two strips with 120 LEDs each. I opted for a 5V 5A “wall wart”, acknowledging that this might be underpowered. Here is the schematic, drawn in EasyEDA.

Digital Schematics: The Code

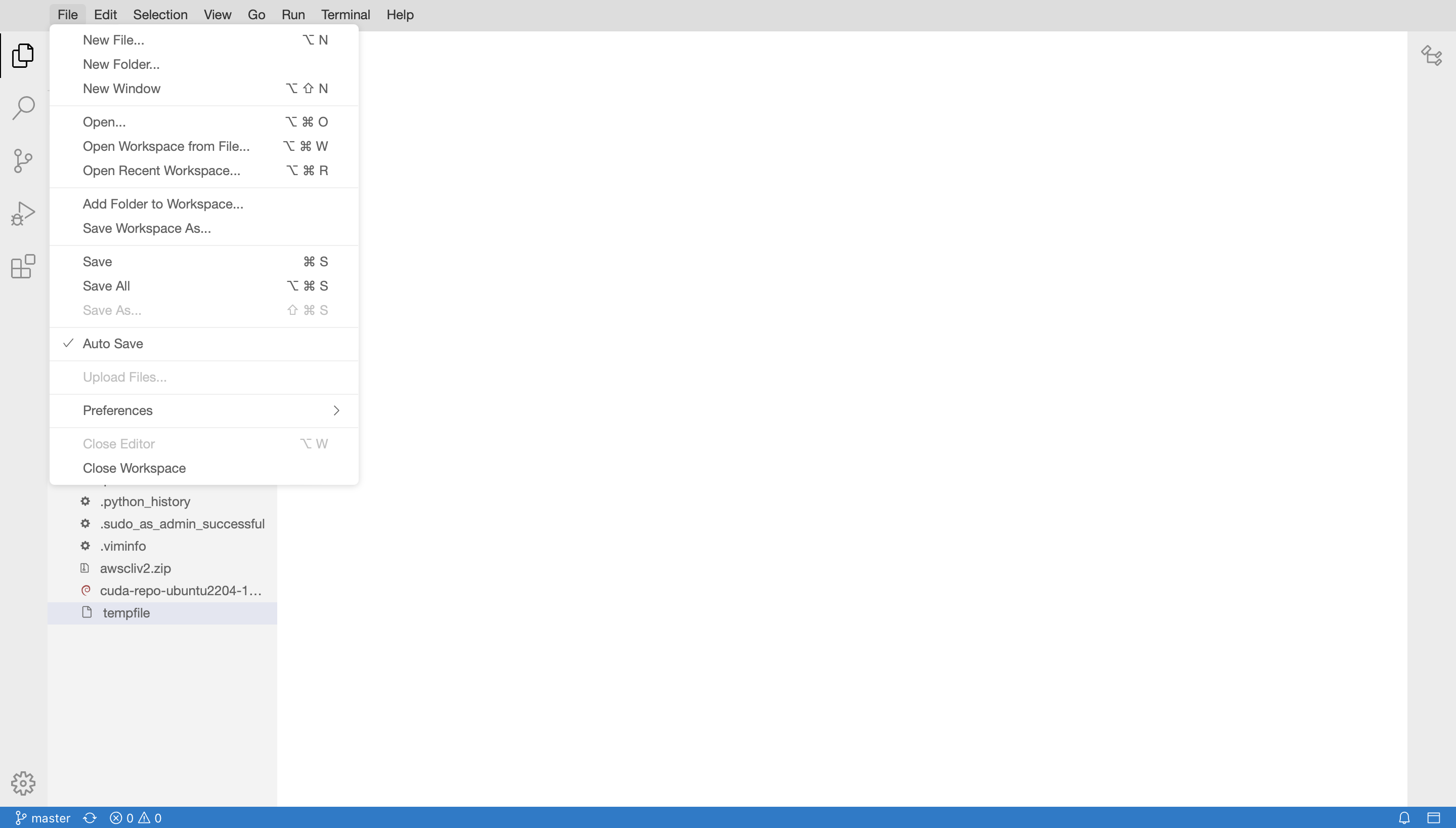

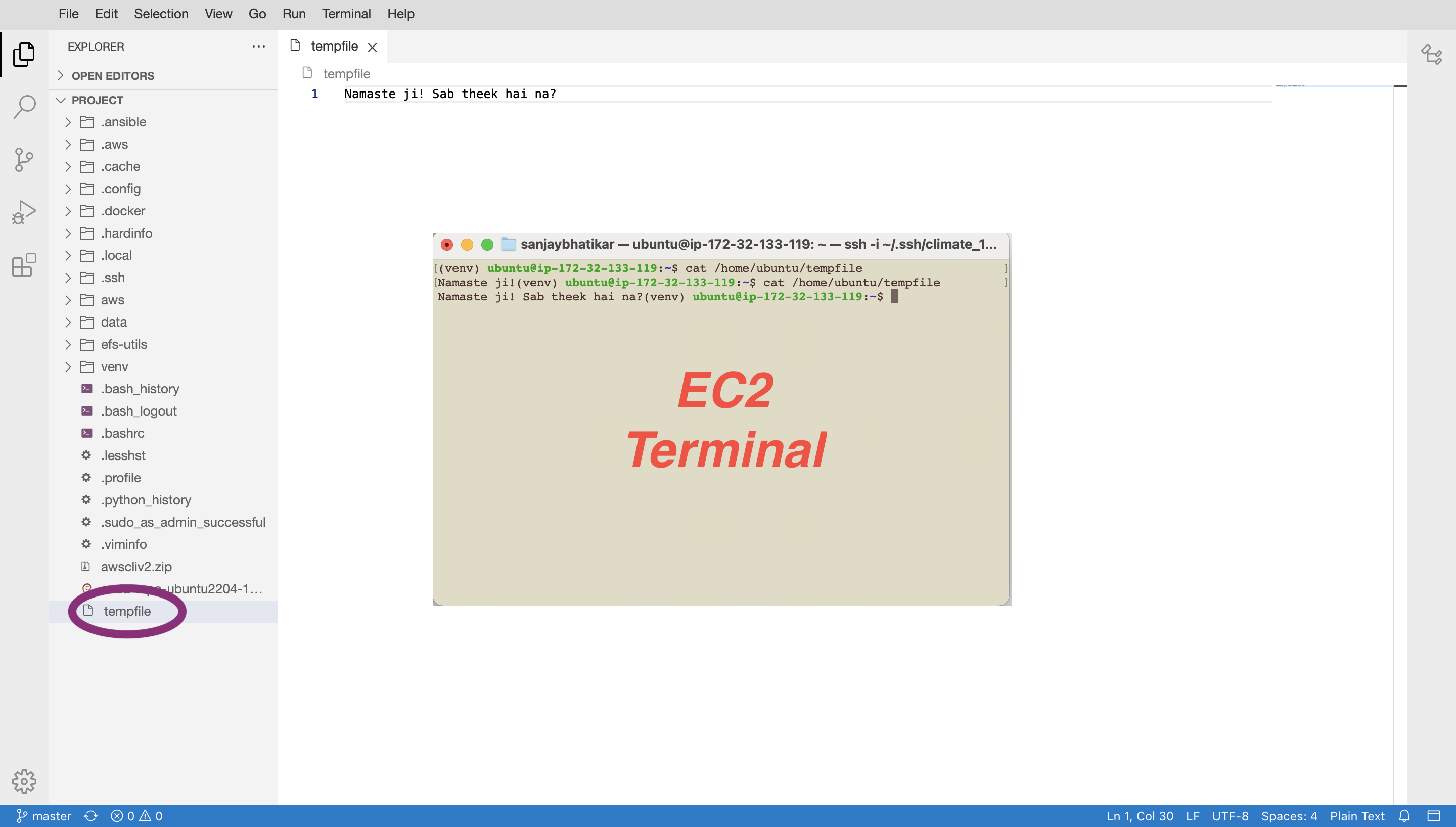

The code is in the FastColors repo on GitHub. I used PlatformIO in VS Code and programmed the ESP32 board with the Arduino framework. I also used the FastLED library to control each individually addressable LED.

Setup:

I have set up the LED strips in mirror mode so that they display synchronized patterns. Each strip is driven by its own GPIO pin on the ESP32. I used GPIO16 and GPIO17.

Two concepts are important for understanding how the code operates:

- Class FastLED: It acts as the digital twin of an LED strip, associating a data pin on the ESP32 with color information. The data pin is a GPIO pin that is physically wired to the data input of one LED strip. The color information is an array declared as CRGB leds[NUM_LEDS] that holds the color of each LED in the strip. The array size NUM_LEDS corresponds to the number of LEDs addressed via the data pin. When mirroring, the colors are synchronized, so the same array can be used across multiple strips.

- Palette and Blending: In FastLED, a palette is a collection of 16 colors. A color is obtained by indexing into a palette with a number in the range 0-255. The 16 explicit palette entries are spread evenly across the 0-255 range, and the intermediate values are RGB-interpolated between adjacent explicit entries. FastLED provides a selection of ready-made palettes as well as the ability to define custom ones. It also offers a choice of blending modes that control this interpolation.
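The following host-side C++ sketch makes the palette lookup concrete. It uses a 16-entry palette indexed with 0-255. The upper 4 bits of the index select an explicit entry. The lower 4 bits blend linearly toward the next entry, wrapping from the last entry back to the first. This illustrates the same idea as FastLED's linear blending, but it is not FastLED's own code. `Rgb`, `Palette16`, and `colorFromPalette16()` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

struct Rgb {
    uint8_t r, g, b;
    bool operator==(const Rgb& o) const { return r == o.r && g == o.g && b == o.b; }
};

using Palette16 = std::array<Rgb, 16>;

// Linear interpolation from a toward b, where frac is in [0, 16).
static uint8_t lerp8(uint8_t a, uint8_t b, uint8_t frac) {
    return static_cast<uint8_t>(a + ((static_cast<int>(b) - a) * frac) / 16);
}

// Color for a 0-255 index in a 16-entry palette, with linear blending.
Rgb colorFromPalette16(const Palette16& pal, uint8_t index) {
    const uint8_t hi4 = index >> 4;      // which explicit entry (0-15)
    const uint8_t lo4 = index & 0x0F;    // how far toward the next entry
    const Rgb& a = pal[hi4];
    const Rgb& b = pal[(hi4 + 1) % 16];  // entry 15 blends back toward entry 0
    return { lerp8(a.r, b.r, lo4), lerp8(a.g, b.g, lo4), lerp8(a.b, b.b, lo4) };
}
```

With FastLED itself, the equivalent lookup is `ColorFromPalette(currentPalette, index, brightness, LINEARBLEND)`. Passing `NOBLEND` instead returns the nearest explicit entry without interpolation.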

Our setup code creates a digital twin of each LED strip in mirror mode.
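With FastLED, mirroring is typically done by registering one array on both data pins. For example, call `FastLED.addLeds<WS2812B, 16, GRB>(leds, NUM_LEDS);` and then make the same call with pin 17. GRB is the usual color order for WS2812B strips, but it is an assumption here. The host-side model below captures the idea without hardware. Two hypothetical `StripOutput` objects point at one shared buffer, so a single write shows up on both strips. The names are illustrative and are not FastLED types.

```cpp
#include <array>
#include <cassert>
#include <cstdint>
#include <vector>

constexpr int NUM_LEDS = 120;

struct Rgb { uint8_t r, g, b; };

// Hypothetical stand-in for one physical strip: a data pin plus a view of a color buffer.
struct StripOutput {
    int dataPin;
    const Rgb* colors;  // not owned; several strips may share the same buffer
    int count;

    // Return the frame this strip would display if "sent" now.
    std::vector<Rgb> frame() const { return std::vector<Rgb>(colors, colors + count); }
};
```

Because both outputs read the same memory, the patterns stay synchronized without copying any colors.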

Server:

The server calls two functions in a forever-while loop:

- CycleThroughColorPalettes(): Rotates through a selection of color palettes on a timer. The current palette is set here and changed at regular intervals in the specified order.

- FillLEDsFromPaletteColors(): Updates the array with color information using the current palette and the current startIndex, which is passed in as the argument colorIndex. The function colors the LEDs near-instantaneously, indexing into the current palette starting from the colorIndex passed in.
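FillLEDsFromPaletteColors() can be modeled on the host as shown below. The sketch assumes, as in FastLED's own ColorPalette example, that each successive LED advances the palette index by a fixed step. The step is 3 here, which is an assumed value. The uint8_t index simply wraps past 255. To keep the result easy to check, the sketch records the palette index each LED would use. On the ESP32, each slot would instead receive `ColorFromPalette(currentPalette, colorIndex, brightness, LINEARBLEND)`. `fillIndicesFromPalette()` is a hypothetical name.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

constexpr int NUM_LEDS = 120;
constexpr uint8_t kIndexStep = 3;  // assumed spacing between neighboring LEDs

// Host-side model: record the palette index each LED would be colored from.
void fillIndicesFromPalette(std::array<uint8_t, NUM_LEDS>& ledIndex, uint8_t colorIndex) {
    for (int i = 0; i < NUM_LEDS; ++i) {
        ledIndex[i] = colorIndex;
        colorIndex += kIndexStep;  // uint8_t arithmetic wraps modulo 256
    }
}
```

Spreading the indices 3 apart means 120 LEDs sweep through the whole 0-255 palette about 1.4 times along the strip.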

In the forever-while loop, we update the color information at a fixed rate set by UPDATES_PER_SECOND, and startIndex is incremented on each iteration. The net effect is like picking out the last crayon in a box and sticking it back in first place: the colors shift, giving the impression of running lights.
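The running-light effect can be checked with a little arithmetic. The sketch below computes which palette index an LED shows on a given frame. It assumes UPDATES_PER_SECOND = 100 and a per-LED step of 3, both assumed values. startIndex grows by one per frame while neighboring LEDs are 3 apart. As a result, the whole pattern slides one LED toward the start of the strip every 3 frames, which is the crayon rotation described above. `frameDelayMs()` and `paletteIndexAt()` are hypothetical helpers.

```cpp
#include <cassert>
#include <cstdint>

constexpr int UPDATES_PER_SECOND = 100;  // assumed update rate
constexpr uint8_t kIndexStep = 3;        // assumed spacing between neighboring LEDs

// Delay between frames, in milliseconds, for a given update rate.
constexpr int frameDelayMs(int updatesPerSecond) { return 1000 / updatesPerSecond; }

// Palette index shown by LED `led` on frame `frame`, assuming startIndex starts at 0
// and is incremented once per loop iteration (uint8_t wraps modulo 256).
uint8_t paletteIndexAt(uint32_t frame, int led) {
    const uint8_t startIndex = static_cast<uint8_t>(frame);
    return static_cast<uint8_t>(startIndex + kIndexStep * led);
}
```

On the board, each loop iteration would typically end with `FastLED.show()` followed by `FastLED.delay(1000 / UPDATES_PER_SECOND)`, as in FastLED's examples.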

Note that the function CycleThroughColorPalettes() counts off the seconds using secondsMarker. This variable is declared static, so it retains its value between successive function calls. (Neat, huh?!) In this way, the current palette changes at regular intervals, cycling through the palettes.
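Here is a host-side sketch of the static-variable technique. It is not the author's CycleThroughColorPalettes(). Several details are assumptions made so the code can run and be tested off the board. These include the function name `cyclePalettesAt()`, the 10-second interval, and the count of four palettes. The time is also passed in as `nowMs` instead of calling Arduino's `millis()`, and an `int` stands in for the current palette.

```cpp
#include <cassert>
#include <cstdint>

constexpr uint32_t kSecondsPerPalette = 10;  // assumed interval
constexpr int kNumPalettes = 4;              // assumed number of palettes

int currentPalette = 0;  // stand-in for the global current palette

// Advances currentPalette once at least kSecondsPerPalette seconds
// have passed since the last change.
void cyclePalettesAt(uint32_t nowMs) {
    static uint32_t secondsMarker = 0;  // initialized once, keeps its value between calls
    const uint32_t seconds = nowMs / 1000;
    if (seconds - secondsMarker >= kSecondsPerPalette) {
        secondsMarker = seconds;
        currentPalette = (currentPalette + 1) % kNumPalettes;
    }
}
```

A global variable would also work. The static local keeps secondsMarker private to the one function that needs it.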

Packaging: Putting It Together

In a schematic such as a circuit diagram, the project looks much simpler than it is. The devil is in the details!

- ESP32

- WS2812B LED Strip

- Breakout Board

- WAGO Connectors

- Power Supply

- CCTV Camera Box

Design elements are described below.

Design

WAGO 221 blocks

These provide power distribution points inside the box.

CCTV Camera box

Serves as the project box housing the ESP32 and electrical junctions.

Screw Terminals

Each of these provides a quick-connect point for an LED strip.

MCU is powered up and wired

The ESP32 dev board is mounted on a breakout board. The 5V and GND pins are used to power the device, and GPIO16 and GPIO17 are used as data pins.

A custom harness is fabricated for ease of connections

The WS2812B LED strip features a JST connector. A custom harness is fabricated to adapt the connector to a screw terminal block. Once the lights and the project box are in position, the 3-slot screw terminal on the LED strip and the matching 3-slot screw terminal on the box are connected with ferrule-terminated wires cut to the correct length.

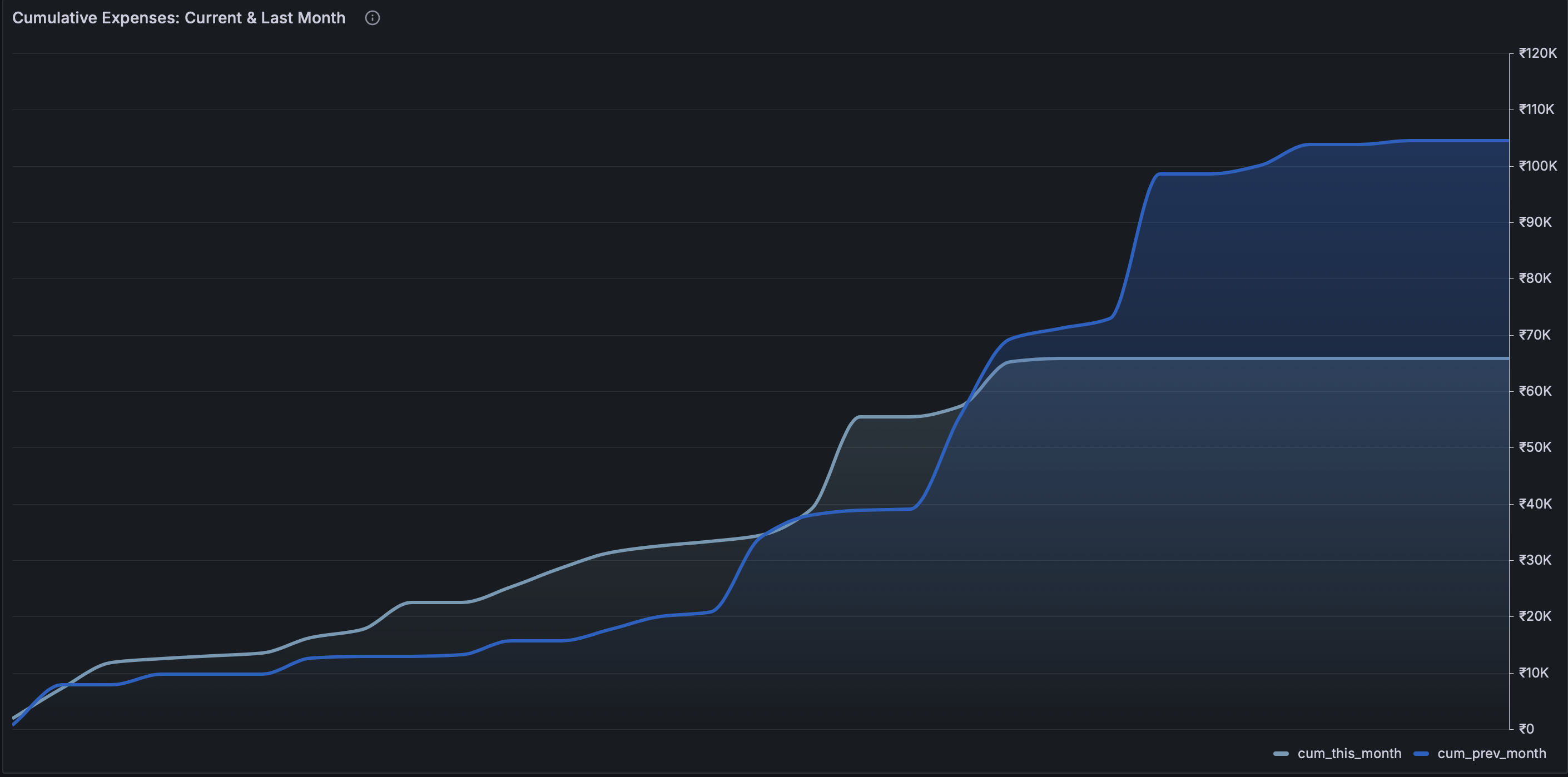

Conclusion: Key Takeaways

The project sets out design standards for working with individually addressable LED lights. These include the following design patterns:

- Make junctions for power distribution in the project box with WAGO connectors,

- Mount the ESP32 on a breakout board, concealing the junctions on the back side,

- Use screw terminals cut to the right size (i.e., with the right number of slots) to terminate modular components, and run ferrule-terminated wires cut to size to make the connections once the modules are in position.

These patterns address many issues that come up when taking a concept into production.