Access One Docker Container From Another in a Microservices Architecture

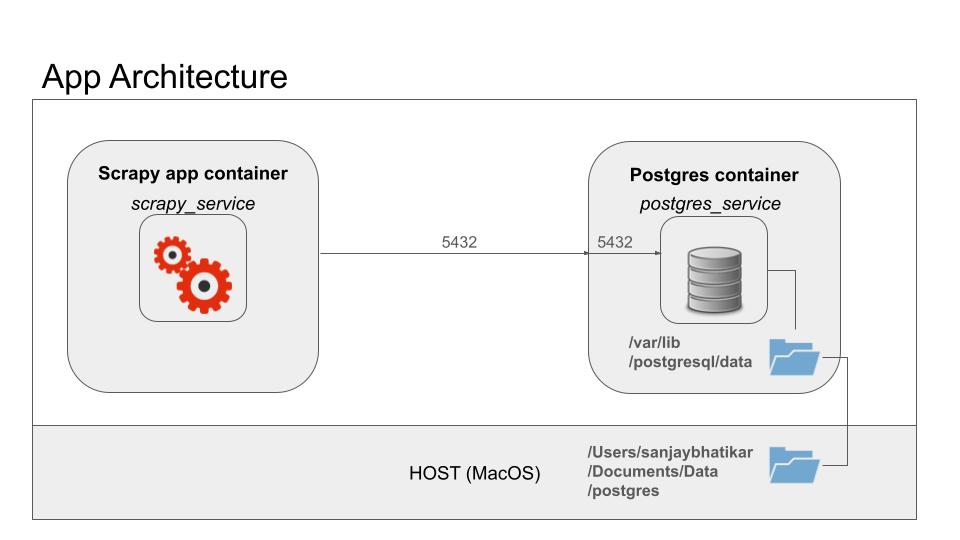

Suppose I have two Docker containers on a host machine: an app running in one container needs a database running in another. The architecture is shown in the figure.

In the figure, the Postgres container is named ‘postgres_service’ and is based on the official Postgres image from Docker Hub. Its data reside on a volume shared with the host machine, so the data persist even after the container is removed.

The app container is named ‘scrapy_service’ and runs an image built on top of the official Python 3.9 base image for Linux. The application code implements a web crawler that scrapes financial news websites.

The web scraper writes its data to the Postgres database. How does it reach Postgres?

On the host machine, the Postgres service is accessible at ‘localhost’ on port 5432. However, this will not work from inside the app container, where ‘localhost’ refers to the container itself.
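A quick way to see the difference is to probe the port from both places. The following is a minimal Python sketch; the helper name `can_connect` is hypothetical and not part of the original project. It simply tries to open a TCP connection and reports whether it succeeded.

```python
import socket


def can_connect(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds within timeout."""
    try:
        # create_connection resolves the host name and opens a TCP socket.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
```

Run on the host, `can_connect("localhost", 5432)` should return True, assuming the Postgres container publishes port 5432 to the host as described above. Run inside scrapy_service, the same call should return False, because ‘localhost’ there is the app container and nothing in it listens on that port.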

The solution: create a Docker network and connect both containers to it.

Create a Docker network and connect the Postgres container to it, then inspect the network to verify.

Spin up the app’s container with a connection to the network.

Create Docker Network: Connect Postgres Container to the Network

`docker network create scrappy-net` creates a network named scrappy-net.

`docker network connect scrappy-net postgres_service` connects the (running) Postgres container to the network.

`docker network inspect scrappy-net` shows the network and the containers attached to it.
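The three network commands can also be scripted. Here is a minimal Python sketch, assuming the docker CLI is on the PATH; the helper names `network_setup_commands` and `run_commands` are hypothetical. By default it does a dry run that only prints each command, so it is safe to try without Docker.

```python
import shlex
import subprocess


def network_setup_commands(network: str, db_container: str) -> list[list[str]]:
    """Return, in order, the docker CLI calls that create the network,
    attach the database container, and inspect the result."""
    return [
        ["docker", "network", "create", network],
        ["docker", "network", "connect", network, db_container],
        ["docker", "network", "inspect", network],
    ]


def run_commands(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print each command (dry run) or execute it, stopping at the first failure."""
    for argv in commands:
        if dry_run:
            print(shlex.join(argv))
        else:
            # check=True raises CalledProcessError if docker exits non-zero.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    run_commands(network_setup_commands("scrappy-net", "postgres_service"))
```

`docker network create` fails if the network already exists, and `docker network connect` reports an error if the container is already attached. With `check=True`, a second real run therefore stops with `subprocess.CalledProcessError` rather than silently continuing.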

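We now have a network ready to accept connections and exchange messages with other containers. On a user-defined network like this one, Docker's embedded DNS server resolves container names, so any container attached to scrappy-net can reach Postgres at the hostname ‘postgres_service’.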
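To confirm the name lookup from inside a container, a small Python sketch can ask the resolver for a hostname's IPv4 addresses using the standard library's `socket.getaddrinfo`. The helper name `resolve` is hypothetical.

```python
import socket


def resolve(hostname: str) -> list[str]:
    """Return the sorted IPv4 addresses hostname resolves to, or [] on failure."""
    try:
        infos = socket.getaddrinfo(
            hostname, None, family=socket.AF_INET, type=socket.SOCK_STREAM
        )
    except socket.gaierror:
        # The resolver could not find the name.
        return []
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})
```

Run inside scrapy_service, `resolve("postgres_service")` should return the Postgres container's address on scrappy-net. On the host it usually returns an empty list, since container names are not published to the host's DNS.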

Spin Up App Container: Link App to Postgres Container

`docker build -t scrapy-app .` builds an image named scrapy-app. The project directory must contain a Dockerfile and a requirements manifest. The entry point that launches the spider is `scrapy crawl spidermoney` or `scrapy crawl spidermint`.

`docker run --name scrapy_service --network scrappy-net -e DB_HOST=postgres_service scrapy-app` spins up the container with a connection to the network and passes the Postgres container's name to the app as the DB_HOST environment variable. It launches the crawler as specified by the entry point, and the container exits when the job is done. Thereafter, it can be re-run with `docker start scrapy_service`; the network connection persists across restarts.

`docker logs scrapy_service > /Users/sanjaybhatikar/Documents/tempt.txt 2>&1` saves the app’s streamed stdout and stderr output to a text file for inspection.